Hi,

I am pretty new to Pollination Cloud. I want to automate a feedback-loop workflow in which models are sent for simulation as they are generated and their results are downloaded as soon as they are available. My first attempt, using pollination-streamlit, was unsuccessful, and I do not know what the problem is. I used this topic as a guide. My questions are the following:

- What am I doing wrong?

- Is it possible to send a Honeybee model directly to the cloud without first saving it as an HBJSON file on a local drive? It is not a big deal for my project, but it would be nice if it is possible.

- What is the process for automatically receiving the simulation results in Python as soon as they are available?

Thanks for your help.

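```python
import os
import pathlib
import time

from ladybug.epw import EPW
from pollination_streamlit.api.client import ApiClient
from pollination_streamlit.interactors import NewJob, Recipe
from queenbee.job.job import JobStatusEnum

api_key = 'my_key'
assert api_key is not None, 'You must provide a valid Pollination API key.'

# project owner and project name - change them to your account and project names
owner = 'my_username'
project = 'demo'

api_client = ApiClient(api_token=api_key)
```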

I assume that the recipe has already been added to the project manually:

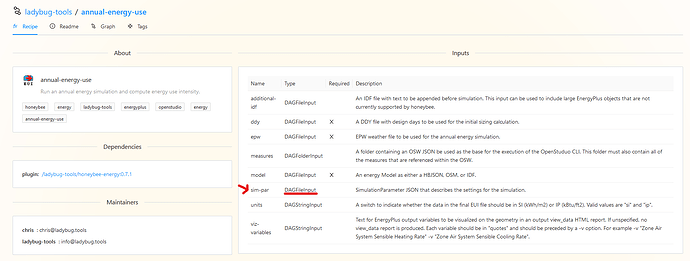

```python
recipe = Recipe('ladybug-tools', 'annual-energy-use', '0.5.3', client=api_client)

# Import EPW and DDY files
# set default values
_percentile_ = 0.4
epw = EPW('my/epw/file/address')
out_path = os.path.join('my/out/file', 'ddy')
ddy_file = epw.to_ddy(out_path, _percentile_)

# for files and folder we have to provide the relative path to
recipe_inputs = {
    'ddy': ddy_file,
    'epw': epw,
    'model': None,  # This changes with iterations
    'add_idf': None,
    'measures': None,
    'sim_par': None,
    'units': None,
    'viz_variables': None
}
```

```python
# Save models as hbjsons
hbfolder = 'my/hbjson/folder'
for model_nr, hb_model in enumerate(my_models):
    hb_model.to_hbjson(name='model' + str(model_nr), folder=hbfolder)
```
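
Regarding the second question, here is a minimal sketch, assuming that `upload_artifact` needs a file on disk (in the upload loop it receives a `pathlib.Path`). The model is serialized from Honeybee's `Model.to_dict()` (the dictionary that `to_hbjson` writes) into a temporary folder that is deleted as soon as the upload returns, so nothing accumulates in `hbfolder`. The helper name `upload_model_dict` and the `upload` callable are hypothetical; whether the Pollination API can accept a model straight from memory is not assumed here.

```python
import json
import pathlib
import tempfile
from typing import Any, Callable, Dict


def upload_model_dict(
    model_dict: Dict[str, Any],
    name: str,
    upload: Callable[[pathlib.Path], Any],
) -> Any:
    """Write a model dictionary to a temporary .hbjson file and upload it.

    `upload` is a hypothetical callable, e.g. a wrapper around
    `new_study.upload_artifact`. The temporary folder is removed on exit.
    """
    with tempfile.TemporaryDirectory() as tmp_dir:
        hbjson_path = pathlib.Path(tmp_dir) / f'{name}.hbjson'
        hbjson_path.write_text(json.dumps(model_dict), encoding='utf-8')
        # upload while the file still exists; the folder is deleted afterwards
        return upload(hbjson_path)
```

Inside the upload loop this could replace the `to_hbjson` step, for example `upload_model_dict(my_models[i].to_dict(), f'model{i}', lambda p: new_study.upload_artifact(p, target_folder='dataset_1'))`.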

```python
# create a new study
new_study = NewJob(owner, project, recipe, client=api_client)
new_study.name = 'Parametric study submitted from Python'

study_inputs = []
for model in pathlib.Path(hbfolder).glob('*.hbjson'):
    inputs = dict(recipe_inputs)  # create a copy of the recipe inputs
    # upload this model to the project
    # It is better to upload the files to a subfolder not to overwrite other files in
    # the project. In this case I call it dataset_1.
    # you can find them here: https://app.pollination.cloud/ladybug-tools/projects/demo?tab=files&path=dataset_1
    uploaded_path = new_study.upload_artifact(model, target_folder='dataset_1')
    inputs['model'] = uploaded_path
    inputs['model_id'] = model.stem  # I'm using the file name as the id.
    study_inputs.append(inputs)

# add the inputs to the study
# each set of inputs creates a new run
new_study.arguments = study_inputs

# create the study
running_study = new_study.create()

job_url = f'https://app.pollination.cloud/{running_study.owner}/projects/{running_study.project}/jobs/{running_study.id}'
print(job_url)
```

```python
time.sleep(5)
status = running_study.status.status

while True:
    status_info = running_study.status
    print('\t# ------------------ #')
    print(f'\t# pending runs: {status_info.runs_pending}')
    print(f'\t# running runs: {status_info.runs_running}')
    print(f'\t# failed runs: {status_info.runs_failed}')
    print(f'\t# completed runs: {status_info.runs_completed}')
    if status in [
        JobStatusEnum.pre_processing, JobStatusEnum.running, JobStatusEnum.created,
        JobStatusEnum.unknown
    ]:
        time.sleep(30)
        running_study.refresh()
        # read the status from the refreshed job, not from the stale status_info
        status = running_study.status.status
    else:
        # study is finished
        time.sleep(2)
        break
```
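
For the third question: the loop above only waits until the whole study is finished. A minimal sketch of a polling loop that reacts to each run as soon as it finishes, assuming two hypothetical callables: `get_run_statuses()`, which returns a mapping from run id to a status string, and `download(run_id)`, which fetches that run's results. How to implement them with the Pollination client (listing the job's runs and downloading their output artifacts) is not shown and should be checked against the Pollination API documentation; the status names `'Succeeded'` and `'Failed'` are also assumptions.

```python
import time
from typing import Callable, Dict, Iterable, List


def collect_results_as_ready(
    get_run_statuses: Callable[[], Dict[str, str]],
    download: Callable[[str], None],
    finished_states: Iterable[str] = ('Succeeded', 'Failed'),
    success_state: str = 'Succeeded',
    interval: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Poll run statuses and download each successful run once, as soon as it finishes.

    Returns the ids of the downloaded runs in the order they finished.
    Keeps polling while no runs are listed yet.
    """
    finished = set(finished_states)
    handled = set()
    downloaded: List[str] = []
    while True:
        statuses = get_run_statuses()
        for run_id, status in statuses.items():
            if run_id in handled or status not in finished:
                continue
            handled.add(run_id)
            if status == success_state:
                download(run_id)  # hypothetical: fetch this run's outputs
                downloaded.append(run_id)
        # stop once every listed run has reached a finished state
        if statuses and all(s in finished for s in statuses.values()):
            return downloaded
        sleep(interval)
```

The callables are passed in so the loop itself stays independent of the client library; `sleep` is injectable only to make the loop easy to test.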

I receive the following error:

```
WARNING streamlit.runtime.caching.cache_data_api: No runtime found, using MemoryCacheStorageManager
Traceback (most recent call last):

  File ~\AppData\Local\anaconda3\lib\site-packages\spyder_kernels\py3compat.py:356 in compat_exec
    exec(code, globals, locals)

  File \pollination_all_external.py:2715
    running_study = new_study.create()

  File ~\AppData\Local\anaconda3\lib\site-packages\pollination_streamlit\interactors.py:165 in create
    qb_job = self.generate_qb_job()

  File ~\AppData\Local\anaconda3\lib\site-packages\pollination_streamlit\interactors.py:174 in generate_qb_job
    arguments = self._generate_qb_job_arguments()

  File ~\AppData\Local\anaconda3\lib\site-packages\pollination_streamlit\interactors.py:200 in _generate_qb_job_arguments
    run_args.append(JobPathArgument.parse_obj({

  File pydantic\main.py:526 in pydantic.main.BaseModel.parse_obj

  File pydantic\main.py:341 in pydantic.main.BaseModel.__init__

ValidationError: 7 validation errors for JobPathArgument
source -> type
  string does not match regex "^HTTP$" (type=value_error.str.regex; pattern=^HTTP$)
source -> url
  field required (type=value_error.missing)
source -> type
  string does not match regex "^S3$" (type=value_error.str.regex; pattern=^S3$)
source -> key
  field required (type=value_error.missing)
source -> endpoint
  field required (type=value_error.missing)
source -> bucket
  field required (type=value_error.missing)
source -> path
  str type expected (type=type_error.str)
```
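
On the first question: the last validation error (`source -> path: str type expected`) suggests that a file or folder input received something other than a path string. In `recipe_inputs`, `'epw'` is an `EPW` object rather than a path, `'ddy'` is a local file that is never uploaded, and optional file inputs such as `'add_idf'` and `'measures'` are `None`. Below is a minimal sketch of preparing the shared inputs, assuming that path inputs must be files uploaded with `upload_artifact` (as is already done for the model) and that optional inputs can be left out instead of set to `None`. The set `PATH_INPUTS` and the helper `prepare_run_inputs` are hypothetical; the recipe's own input definitions say which inputs are paths. It may also be worth confirming that `model_id` is an input of this recipe version.

```python
import os
from typing import Any, Callable, Dict

# Hypothetical: which inputs of the recipe are file/folder (path) inputs.
PATH_INPUTS = {'model', 'epw', 'ddy', 'add_idf', 'measures'}


def prepare_run_inputs(
    inputs: Dict[str, Any],
    upload: Callable[[str], str],
) -> Dict[str, Any]:
    """Return a copy of `inputs` with only values that can be sent as arguments.

    - inputs set to None are dropped (assumed optional, left at recipe defaults)
    - path inputs given as local file paths are uploaded and replaced by the
      project path string returned by `upload`
    """
    prepared: Dict[str, Any] = {}
    for name, value in inputs.items():
        if value is None:
            continue  # omit unset optional inputs instead of sending None
        if name in PATH_INPUTS:
            if not isinstance(value, (str, os.PathLike)):
                raise TypeError(f'{name!r} must be a file path, got {type(value).__name__}')
            value = upload(os.fspath(value))
        prepared[name] = value
    return prepared
```

It is meant to be called once on the shared inputs (with `'epw'` set to the `.epw` file path used to build `epw`, not the `EPW` object) before the per-model loop adds `'model'`, for example with `lambda p: new_study.upload_artifact(pathlib.Path(p), target_folder='dataset_1')` as `upload`.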